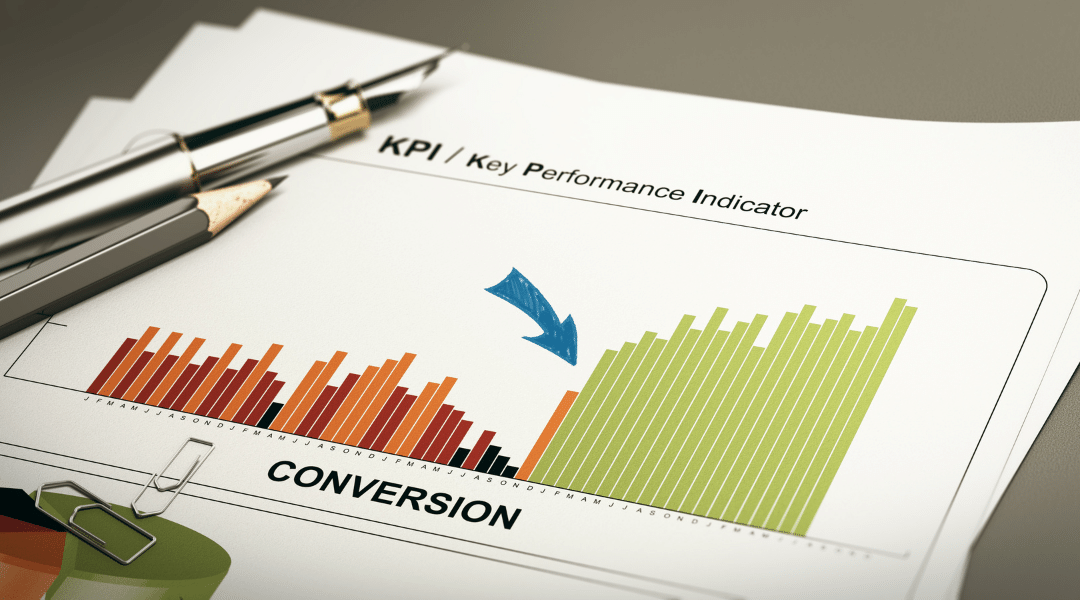

Effective A/B Testing: Boosting Conversion Rates

In the ever-evolving landscape of digital marketing, one thing remains constant: the pursuit of higher conversion rates. Whether you’re running an e-commerce site, a SaaS platform, or a content-driven blog, the key to success often lies in understanding your audience and optimizing your website or app accordingly. This is where A/B testing comes into play as a powerful tool for boosting conversion rates.

What is A/B Testing?

A/B testing, also known as split testing, is a method digital marketers and website or app developers use to compare two versions of a webpage or app screen and determine which one performs better in terms of conversion. It involves dividing your audience into two groups: one group experiences version A (the control), while the other sees version B (the variation). By analyzing how each group behaves, you can make informed, data-driven decisions that improve user engagement and conversion rates.

The Scientific Method Applied

A/B testing isn’t just a marketing tactic; it’s a systematic process grounded in the scientific method. Here’s a breakdown of how it works:

- Hypothesis Formulation: It all begins with a hypothesis—a specific change or alteration you believe will improve the performance of a webpage or app screen. This change could relate to anything on the page, from the color of a button to the wording of a headline. Your hypothesis represents your educated guess about what will resonate better with your audience.

- Control and Variation: In an A/B test, you create two versions of the page or screen: the control (version A) and the variation (version B). The control is left unchanged, serving as the benchmark against which you’ll measure the impact of your proposed change.

- Randomized Split: Your audience is then divided randomly into two groups. One group sees version A (the control), while the other group encounters version B (the variation). Random assignment spreads factors like user demographics and behavior evenly across both groups on average, so they don’t systematically skew your test results.

- Data Collection: Both versions of the page are exposed to real users, and their interactions are meticulously tracked and recorded. This data includes metrics like click-through rates, conversion rates, bounce rates, and other relevant user actions.

- Statistical Analysis: Once sufficient data is collected, it’s subjected to rigorous statistical analysis. The goal is to determine whether the variation (version B) outperforms the control (version A) in a statistically significant way. A statistically significant result means a difference this large would be unlikely to arise by chance alone if the two versions actually performed the same, so it probably reflects a real difference in how users respond.

- Decision-Making: If the variation proves to be the winner—meaning it yields better user engagement or higher conversion rates—then it becomes the new default. The changes outlined in your hypothesis are implemented across the board. If there’s no significant difference, you may need to refine your hypothesis and conduct further tests.
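In practice, the randomized split is often implemented by hashing a stable user identifier instead of drawing a fresh random number on every visit. That way, a returning user keeps seeing the same version. The sketch below is a minimal illustration that assumes each user has a persistent ID. The function name `assign_variant` and the experiment name are hypothetical, and real testing tools may bucket users differently.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a user to 'A' (control) or 'B' (variation).

    Hypothetical helper: hashing the experiment name together with the user ID
    gives each user a stable, effectively random bucket for that experiment.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    # Users below the split point see the control; everyone else sees the variation.
    return "A" if bucket < split else "B"
```

For example, `assign_variant("user-123", "cta-color-test")` always returns the same letter for that user and experiment. Across many users, roughly half land in each group. Including the experiment name in the hash keeps assignments independent from one experiment to the next.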

Why A/B Testing Matters

A/B testing matters because it replaces guesswork and gut feelings with concrete data-driven decisions. It empowers businesses and marketers to fine-tune their digital assets based on how real users respond. By rigorously testing changes, you can systematically optimize your website or app for better user experiences, increased conversions, and ultimately, improved business outcomes.

In summary, A/B testing is a methodical approach to web and app optimization. It involves forming hypotheses, creating controlled experiments, collecting data, and using statistical analysis to determine which changes resonate best with your audience. It’s a powerful tool in the digital marketer’s toolkit, providing a scientific basis for refining and enhancing online experiences.

The Science Behind A/B Testing

A/B testing is more than just a marketing tactic; it’s rooted in the scientific method and follows the principles of experimentation and data analysis. It starts with a hypothesis: a specific change you believe will improve user engagement or conversion. That change could be anything from altering the color of a call-to-action button to rewriting a product description. You implement the change in version B while keeping version A (the original) unchanged. Here’s a deeper look at the scientific aspects of A/B testing:

1. Hypothesis Development

The scientific process begins with a hypothesis—a clear and testable statement that outlines what you expect to happen as a result of your experiment. In A/B testing, your hypothesis specifies the change you believe will improve user engagement or conversion rates. It’s essentially your educated guess about what will resonate better with your audience.

2. Controlled Experimentation

A fundamental tenet of science is conducting controlled experiments to isolate variables and draw meaningful conclusions. In A/B testing, you create two versions of a webpage or app screen: the control (version A) and the variation (version B). The control remains unchanged and serves as the baseline, allowing you to accurately measure the effect of the change you are testing.

3. Randomization

Randomization is a crucial scientific principle that guards against selection bias in an experiment. In A/B testing, your audience is divided randomly into two groups. As a result, factors like user demographics, location, or behavior are balanced between the groups on average. Differences in performance between version A and version B can then be attributed to the change itself rather than to who happened to land in each group.
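Randomization only balances groups on average, so it is worth confirming that the split actually came out as planned. One common check is a sample ratio mismatch (SRM) test. It compares the observed group sizes with the intended ratio using a chi-square goodness-of-fit test. The sketch below uses only Python's standard library and assumes a two-group test. The function name and the example counts are hypothetical.

```python
import math

def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for the A/B group sizes."""
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (n_a - expected_a) ** 2 / expected_a + (n_b - expected_b) ** 2 / expected_b
    # For 1 degree of freedom, P(X >= chi2) equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))
```

With a planned 50/50 split, `srm_p_value(5000, 5000)` returns 1.0, while `srm_p_value(5000, 5400)` returns a p-value far below 0.001. A very small p-value suggests that assignment or tracking is broken. Many practitioners use a strict threshold such as 0.001 for this check and do not trust the test results until the cause is found.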

4. Data Collection

The scientific process relies on data collection to quantify and analyze outcomes. In A/B testing, both versions of the webpage or app screen are exposed to real users, and their interactions are carefully tracked and recorded. This data includes metrics such as click-through rates, conversion rates, bounce rates, and other user actions.
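Before any statistical analysis, the recorded interactions are usually reduced to a few counts per version. The sketch below assumes a simplified log with one `(variant, converted)` record per user. Real analytics exports will look different, and the records in the example are made up for illustration.

```python
from collections import defaultdict

def summarize(records):
    """Turn (variant, converted) records into per-variant counts and conversion rates."""
    visitors = defaultdict(int)
    conversions = defaultdict(int)
    for variant, converted in records:
        visitors[variant] += 1
        if converted:
            conversions[variant] += 1
    return {
        variant: {
            "visitors": visitors[variant],
            "conversions": conversions[variant],
            "conversion_rate": conversions[variant] / visitors[variant],
        }
        for variant in sorted(visitors)
    }

# Made-up records: one entry per user.
records = [("A", True), ("A", False), ("A", False), ("A", False),
           ("B", True), ("B", True), ("B", False), ("B", False)]
print(summarize(records))
# {'A': {'visitors': 4, 'conversions': 1, 'conversion_rate': 0.25},
#  'B': {'visitors': 4, 'conversions': 2, 'conversion_rate': 0.5}}
```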

5. Statistical Analysis

Statistical analysis is a hallmark of the scientific method, and it’s central to A/B testing. The goal of statistical analysis is to determine whether the observed differences in user behavior between the control and variation are statistically significant. In other words, are the changes you see in the data likely due to your alterations, or could they be random fluctuations?
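For conversion rates, one widely used option is the two-proportion z-test, though it is not the only one. Write $x_A$ and $x_B$ for the number of conversions and $n_A$ and $n_B$ for the number of visitors in each group. The test statistic compares the observed difference with its standard error under the pooled conversion rate $\hat p$. The worked numbers below are hypothetical.

```latex
\begin{aligned}
\hat p_A &= \frac{x_A}{n_A}, \qquad \hat p_B = \frac{x_B}{n_B}, \qquad \hat p = \frac{x_A + x_B}{n_A + n_B} \\
z &= \frac{\hat p_B - \hat p_A}{\sqrt{\hat p\,(1 - \hat p)\left(\frac{1}{n_A} + \frac{1}{n_B}\right)}} \\[4pt]
&\text{Example: } x_A = 400,\ n_A = 10000,\ x_B = 460,\ n_B = 10000 \\
\hat p_A &= 0.040, \qquad \hat p_B = 0.046, \qquad \hat p = \frac{860}{20000} = 0.043 \\
z &= \frac{0.006}{\sqrt{0.043 \times 0.957 \times 0.0002}} \approx \frac{0.006}{0.00287} \approx 2.09
\end{aligned}
```

If there is no real difference between the versions, $z$ approximately follows a standard normal distribution when both groups are reasonably large. In that case, $|z| \approx 2.09$ corresponds to a two-sided p-value of roughly 0.037, which is below the conventional 0.05 threshold.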

6. Null Hypothesis Testing

In A/B testing, you typically have a null hypothesis and an alternative hypothesis. The null hypothesis states that there is no real difference between the control and the variation, while the alternative hypothesis states that there is one. Based on the data, statistical analysis lets you either reject the null hypothesis in favor of the alternative or fail to reject it. Failing to reject does not prove the two versions are identical. It only means the test did not find enough evidence of a difference.
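The reject or fail-to-reject decision can be sketched in Python with the standard library's `statistics.NormalDist` (Python 3.8+). This sketch assumes a two-sided two-proportion z-test with a pooled standard error and a significance level of 0.05. The function name and the visitor and conversion counts are hypothetical.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided z-test of H0: control (A) and variation (B) convert at the same rate."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under H0 both groups share one conversion rate, so pool the data to estimate it.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all visitors converted or none did; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: chance of a |z| at least this large if H0 were true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    decision = "reject H0" if p_value < alpha else "fail to reject H0"
    return z, p_value, decision

z, p, decision = two_proportion_z_test(1000, 20000, 1100, 20000)
print(f"z = {z:.2f}, p = {p:.3f}, {decision}")
```

Take 1,000 conversions from 20,000 visitors for A and 1,100 from 20,000 for B. That gives z ≈ 2.24 and p ≈ 0.025, so the null hypothesis is rejected at the 0.05 level. Identical counts in both groups give z = 0 and p = 1, so the null hypothesis is not rejected.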

7. Informed Decision-Making

A/B testing doesn’t stop at data analysis. It’s about making informed decisions. If the data indicates that the variation (version B) outperforms the control (version A) in a statistically significant way, you implement the changes outlined in your hypothesis. This data-driven approach replaces guesswork with evidence-based decisions.
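A p-value alone says little about how large the improvement is. For that reason, many teams also look at a confidence interval for the difference in conversion rates before rolling out a change. The sketch below assumes a normal-approximation (Wald) 95% interval and a simple, hypothetical decision rule:

- Ship B only if the whole interval lies above zero.
- Keep A if the interval lies entirely below zero.
- Otherwise, treat the test as inconclusive.

```python
import math
from statistics import NormalDist

def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Normal-approximation (Wald) interval for the lift p_B - p_A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Unpooled standard error: no assumption that the two rates are equal.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    diff = p_b - p_a
    return diff - z_crit * se, diff + z_crit * se

def decide(lower, upper):
    """Hypothetical rollout rule based on where the interval falls relative to zero."""
    if lower > 0:
        return "ship B"
    if upper < 0:
        return "keep A"
    return "inconclusive: refine the hypothesis or collect more data"

low, high = diff_confidence_interval(1000, 20000, 1100, 20000)
print(f"95% CI for lift: [{low:.4f}, {high:.4f}] -> {decide(low, high)}")
```

With 1,000 conversions out of 20,000 visitors for A and 1,100 out of 20,000 for B, the interval runs from roughly 0.06 to 0.94 percentage points. Because the whole interval is above zero, the rule recommends shipping B. Teams that care about practical impact often replace zero with a minimum lift worth the cost of the change.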

8. Iterative Process

Science is an iterative process of testing, refining hypotheses, and conducting further experiments. Similarly, A/B testing is an ongoing process. As user behavior and preferences evolve, you continuously refine your digital assets to ensure they deliver optimal results.

To sum up, A/B testing embraces the scientific method to improve digital experiences. It involves forming hypotheses, conducting controlled experiments, collecting and analyzing data, and making informed decisions based on statistical analysis. By adhering to these scientific principles, businesses and marketers can systematically optimize their websites and apps, ultimately leading to better user engagement and increased conversion rates.

Elements to Test

A/B testing is highly versatile and can be applied to various elements on your website or app, including:

1. Headlines and Copy

Testing different headlines, product descriptions, or ad copy can reveal which messaging resonates most with your audience.

2. Images and Videos

Visual elements, such as images and videos, can have a significant impact on user engagement. Test different media types and placements to find the winning combination.

3. Call-to-Action (CTA) Buttons

The color, size, placement, and wording of CTA buttons can influence click-through and conversion rates.

4. Forms

Optimizing the length and layout of forms, as well as the number of form fields, can reduce friction in the conversion process.

5. Pricing and Discounts

For e-commerce sites, tweaking pricing strategies or offering different discount structures can affect purchase decisions.

6. Page Layout

Experimenting with the overall layout and design of a page can lead to improved user experience and conversion rates.

Best Practices for Effective A/B Testing

To ensure your A/B testing efforts yield meaningful results, consider these best practices:

1. Clearly Define Goals

Set specific goals for your A/B tests. Are you aiming to increase sign-ups, sales, or click-through rates? Having clear objectives will guide your testing strategy.

2. Test One Variable at a Time

Isolate the variable you want to test. Changing multiple elements simultaneously can make it challenging to identify what caused a particular outcome.

3. Gather Sufficient Data

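Make sure your sample size is large enough to detect the effect you care about. A sample size isn’t itself “statistically significant”; rather, the test needs enough statistical power to reliably detect a real difference of the size you expect. Larger samples give more reliable results, and smaller expected effects require larger samples. Testing tools like Optimizely can help with this. Google Optimize, once a popular option, was discontinued in September 2023.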
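How large is large enough? A common normal-approximation formula for comparing two conversion rates estimates the visitors needed per group from four inputs:

- the baseline rate
- the smallest lift worth detecting
- the significance level α
- the desired power, meaning the chance of detecting a real effect of that size

The sketch below assumes a two-sided test, α = 0.05, and 80% power. The function name and example rates are hypothetical, and dedicated calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist

def sample_size_per_group(p_control, p_variation, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH group for a two-sided two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_variation * (1 - p_variation)
    effect = p_variation - p_control
    # Round up: a fractional visitor still means you need one more.
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

print(sample_size_per_group(0.04, 0.05))
```

Under these assumptions, detecting a move from a 4% to a 5% conversion rate needs roughly 6,700 visitors per group. Because the effect size enters the formula squared, halving the detectable lift roughly quadruples the required sample.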

4. Be Patient

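A/B testing requires patience. Decide on a test duration in advance, often at least one full week, to account for daily and weekly variations in user behavior. Resist stopping a test the moment the results look significant: repeatedly checking and stopping early inflates the chance of a false positive.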
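One way to turn a required sample size into a planned duration is to divide by expected daily traffic and round up to whole weeks, so every day of the week is represented equally. The sketch below assumes traffic is split evenly across groups and stays roughly constant from day to day. The one-week minimum and the function name are illustrative assumptions, not fixed rules.

```python
import math

def planned_duration_days(n_per_group, daily_visitors, groups=2, min_days=7):
    """Days needed to reach the target sample size, rounded up to whole weeks."""
    days = math.ceil(n_per_group * groups / daily_visitors)
    # Never plan shorter than the minimum, so weekly cycles are covered.
    days = max(days, min_days)
    return math.ceil(days / 7) * 7

print(planned_duration_days(6743, daily_visitors=1000))  # 13,486 visitors needed -> 14
```

With 900 daily visitors instead, the same target takes 15 days, which rounds up to a 21-day test.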

5. Analyze Results

Use analytics tools to evaluate the data objectively. Look for statistically significant differences between the control (A) and the variation (B).

6. Implement the Winning Variation

Once a winning variation is identified, implement it as the new default on your site or app.

Continuous Improvement

A/B testing isn’t a one-time endeavor; it’s an ongoing process of continuous improvement. As user behavior and preferences evolve, so should your website or app. Regularly revisit your A/B testing strategy to keep your conversion rates on an upward trajectory.

In conclusion, effective A/B testing is a cornerstone of successful digital marketing. By applying the scientific method to your website or app optimization efforts, you can unlock insights that lead to higher conversion rates. Remember that there’s always room for improvement, and A/B testing is your compass on the journey to conversion rate optimization.